I am currently a Ph.D. candidate at TsinghuaNLP, supervised by Prof. Maosong Sun. Before this, I obtained my bachelor's degree at CST@THU.

My current research interest lies in efficient methods for LLMs. Specifically, I am interested in how to make training and inference more efficient and how to better understand the training dynamics of LLMs. During my PhD, I interned at ByteDance Seed-LLM, where I worked on LLM pretraining research. I have published papers at top international conferences.

🔥 News

- 2024.07: 🎉 Our work on training dynamics was accepted to COLM 2024. See you in Philadelphia!

- 2024.05: 🎉 One first-author paper (FastFiD) was accepted to ACL 2024. See you in Thailand!

- 2024.02: 🎉 We released an elegant piece of work on training dynamics.

- 2023.04: 🎉 Successfully passed my PhD proposal defense and became a PhD candidate!

📝 Publications

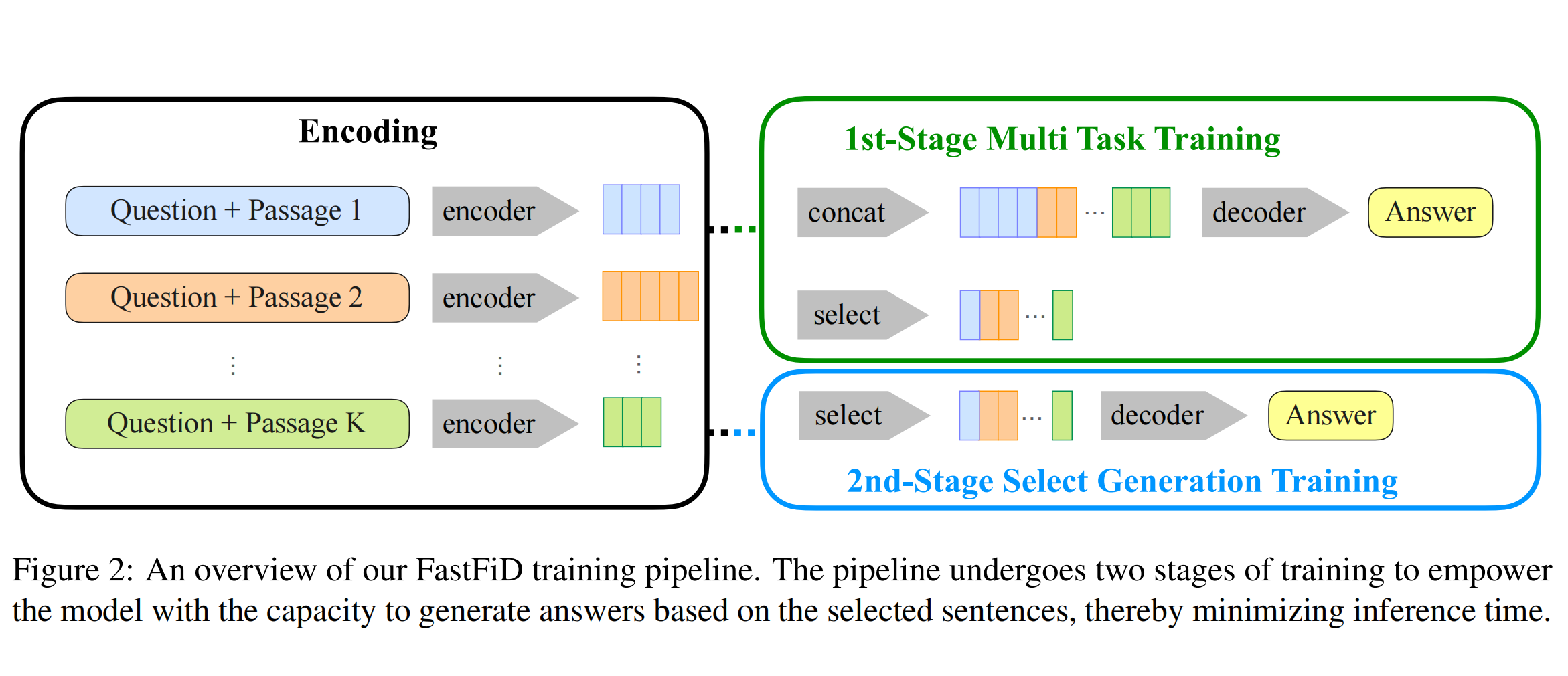

FastFiD: Improve Inference Efficiency of Open Domain Question Answering via Sentence Selection

Yufei Huang, Xu Han, Maosong Sun

- We propose a two-stage training method called FastFiD to address the inference efficiency problem in RAG systems. With FastFiD, we speed up inference by 2.3X-5.7X while maintaining performance on three commonly used datasets (NQ, TriviaQA, and ASQA).

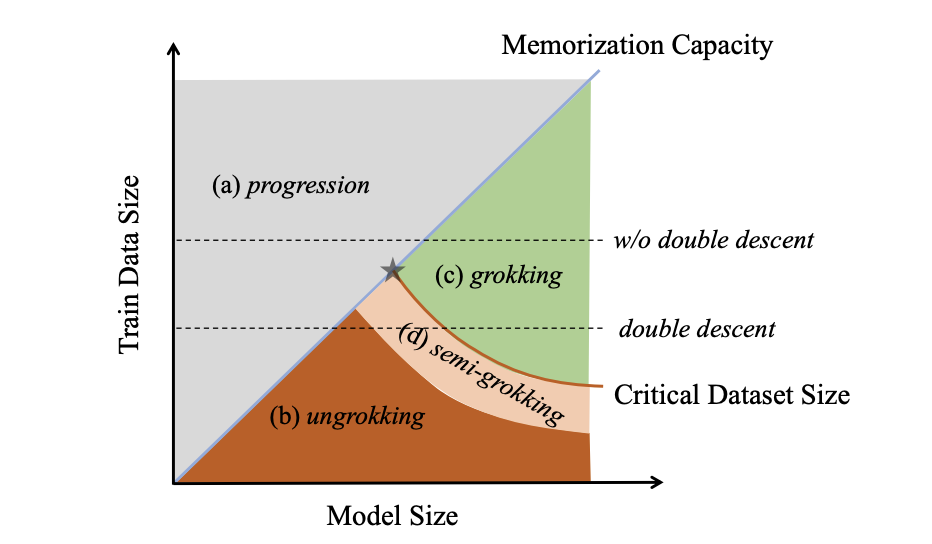

Yufei Huang, Shengding Hu, Xu Han, Zhiyuan Liu, Maosong Sun

- We propose a comprehensive framework that provides a unified view of grokking, double descent, and emergent abilities, focusing on the competition between memorization and generalization circuits in neural networks.

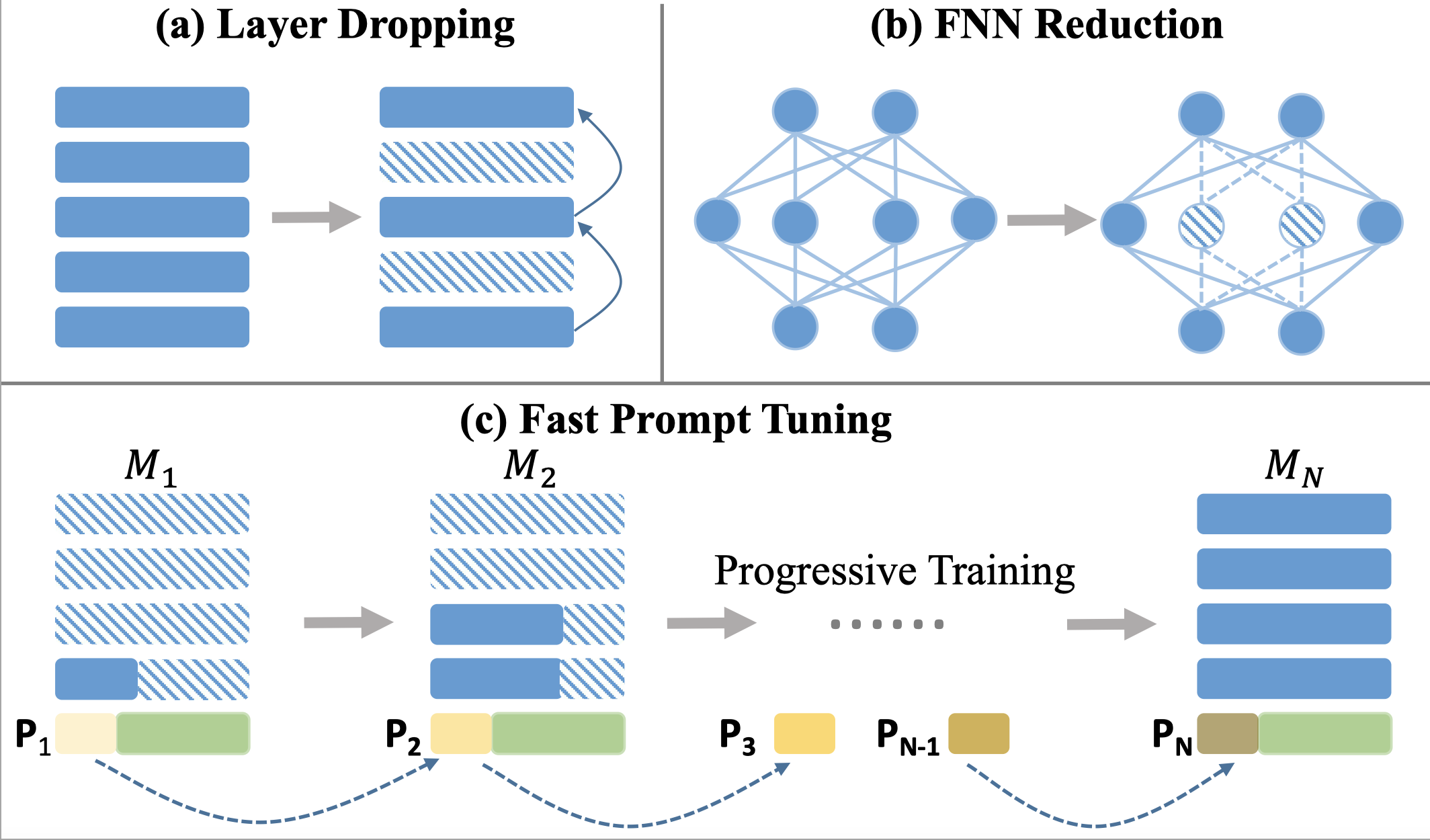

FPT: Improving Prompt Tuning Efficiency via Progressive Training

Yufei Huang*, Yujia Qin*, Huadong Wang, Yichun Yin, Maosong Sun, Zhiyuan Liu, Qun Liu

- In this work, we propose Fast Prompt Tuning (FPT), which saves over 30% of training computation while achieving performance comparable to vanilla Prompt Tuning.

- ACDiT: Interpolating Autoregressive Conditional Modeling and Diffusion Transformer (ICLR 2025 Workshop World Models). Jinyi Hu*, Shengding Hu*, Yuxuan Song, Yufei Huang, Mingxuan Wang, Hao Zhou, Zhiyuan Liu, Wei-Ying Ma, Maosong Sun

- Configurable Foundation Models: Building LLMs from a Modular Perspective (arXiv 2024.09). Chaojun Xiao, Zhengyan Zhang, Chenyang Song, Dazhi Jiang, Feng Yao, Xu Han, Xiaozhi Wang, Shuo Wang, Yufei Huang, Guanyu Lin, Yingfa Chen, Weilin Zhao, Yuge Tu, Zexuan Zhong, Ao Zhang, Chenglei Si, Khai Hao Moo, Chenyang Zhao, Huimin Chen, Yankai Lin, Zhiyuan Liu, Jingbo Shang, Maosong Sun

- Tool Learning with Foundation Models (ACM Comput. Surv.). Yujia Qin, Shengding Hu, Yankai Lin, Weize Chen, Ning Ding, Ganqu Cui, Zheni Zeng, Yufei Huang, Chaojun Xiao, Chi Han, Yi Ren Fung, Yusheng Su, Huadong Wang, Cheng Qian, Runchu Tian, Kunlun Zhu, Shihao Liang, Xingyu Shen, Bokai Xu, Zhen Zhang, Yining Ye, Bowen Li, Ziwei Tang, Jing Yi, Yuzhang Zhu, Zhenning Dai, Lan Yan, Xin Cong, Yaxi Lu, Weilin Zhao, Yuxiang Huang, Junxi Yan, Xu Han, Xian Sun, Dahai Li, Jason Phang, Cheng Yang, Tongshuang Wu, Heng Ji, Zhiyuan Liu, Maosong Sun

- TR-BERT: Dynamic Token Reduction for Accelerating BERT Inference (NAACL 2021). Deming Ye, Yankai Lin, Yufei Huang, Maosong Sun

- Improving Web Image Search with Contextual Information (CIKM 2019). Xiaohui Xie, Jiaxin Mao, Yiqun Liu, Maarten de Rijke, Qingyao Ai, Yufei Huang, Min Zhang, Shaoming Ma

💻 Internships

- 2024.06 - 2024.10 ByteDance Seed-LLM, Beijing, China.

- 2023.02 - 2024.02 DeepLang AI, Beijing, China.

🎖 Honors and Awards

- 2023.10 Comprehensive Excellence Scholarship, Department of Computer Science and Technology, Tsinghua University.

- 2021.10 Comprehensive Excellence Scholarship, Department of Computer Science and Technology, Tsinghua University.

- 2020.06 Excellent Graduate (Bachelor), Department of Computer Science and Technology, Tsinghua University.

- 2019.10 Comprehensive Excellence Scholarship, Department of Computer Science and Technology, Tsinghua University.

- 2018.10 China National Scholarship (Top 5% each year).

- 2017.12 Academic Progress Award in Tsinghua University.

- 2016.10 Second-class Scholarship for Freshmen of Tsinghua University (Top 10 in every province).

📖 Education

- 2023.04 - now, Ph.D. Candidate, Department of Computer Science and Technology, Tsinghua University, Beijing.

- 2020.08 - 2023.04, Ph.D. Student, Department of Computer Science and Technology, Tsinghua University, Beijing.

- 2016.08 - 2020.06, Undergraduate, Department of Computer Science and Technology, Tsinghua University, Beijing.